Inside the Black Box: Demystifying Machine Learning Models

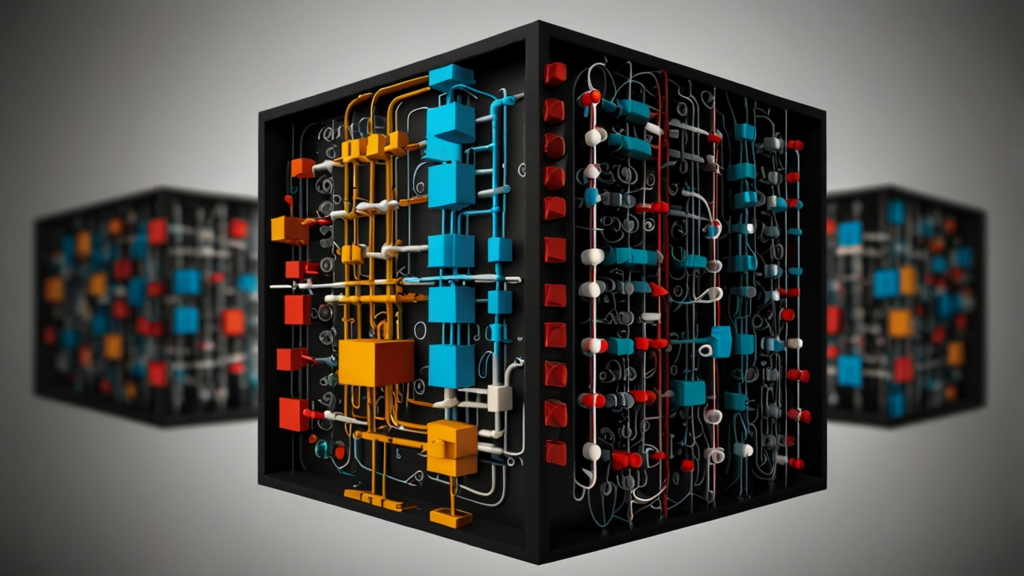

Machine Learning (ML) models are revolutionizing industries, driving innovations in healthcare, finance, transportation, and more. Despite their wide-reaching applications, many of these models, especially large neural networks, function as "black boxes": they produce predictions without revealing how they arrived at them. This opacity can breed mistrust and slow the adoption of ML technologies. To bridge this gap, it's crucial to demystify how these models work and what happens inside them.

The Anatomy of Machine Learning Models

At its core, a machine learning model is a mathematical function whose parameters are learned from data by a training algorithm. The primary aim is to uncover patterns and make predictions or decisions without being explicitly programmed for the task. The process typically involves several stages:

- Data Collection: Gathering relevant data that accurately represents the problem domain.

- Data Preprocessing: Cleaning and transforming data to make it suitable for analysis.

- Model Training: Using algorithms to learn patterns from the preprocessed data.

- Model Evaluation: Testing the model on held-out data it did not see during training, to estimate its accuracy and robustness.

- Model Deployment: Integrating the model into a real-world system for practical use.

While the steps appear straightforward, the complexity lies in the intricacies of the algorithms and the data itself.
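To make the five stages concrete, here is a minimal sketch in plain Python (standard library only). It assumes a toy problem: the "collected" data are synthetic, noisy samples of y = 2x + 1, and the model is a one-feature linear regression fitted by ordinary least squares. All function names are hypothetical illustrations rather than any library's API; real projects typically rely on libraries such as scikit-learn for these steps.

```python
import random
import statistics


def collect_data(n=200, seed=0):
    """Data Collection (hypothetical): noisy samples of y = 2x + 1."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, 0.5) for x in xs]
    return xs, ys


def fit_scaler(xs):
    """Data Preprocessing: learn the mean and standard deviation of a feature."""
    return statistics.fmean(xs), statistics.pstdev(xs)


def transform(xs, mean, std):
    # Standardize to zero mean and unit variance using the fitted statistics.
    return [(x - mean) / std for x in xs]


def train(xs, ys):
    """Model Training: ordinary least squares for a single feature."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    slope = cov / var
    return slope, my - slope * mx


def mse(model, xs, ys):
    """Model Evaluation: mean squared error on data not used for training."""
    slope, intercept = model
    return statistics.fmean((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys))


def make_predictor(model, mean, std):
    """Model Deployment: bundle preprocessing and model into one callable."""
    slope, intercept = model

    def predict(x):
        return slope * (x - mean) / std + intercept

    return predict


xs, ys = collect_data()
split = int(0.8 * len(xs))  # 80/20 train/test split (samples are already random)
train_x, test_x = xs[:split], xs[split:]
train_y, test_y = ys[:split], ys[split:]

mean, std = fit_scaler(train_x)  # fit on the training split only
model = train(transform(train_x, mean, std), train_y)
test_mse = mse(model, transform(test_x, mean, std), test_y)
predict = make_predictor(model, mean, std)
print(f"test MSE: {test_mse:.3f}, prediction at x=4: {predict(4):.2f}")
```

The scaler is fitted on the training split only and then reused for evaluation and deployment, so no information from the test set leaks into the model.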

Types of Machine Learning Models

There are several broad categories of machine learning models, each with unique characteristics and use cases:

- Supervised Learning: Models learn from labeled data, which includes input-output pairs. Common algorithms include Linear Regression, Decision Trees, and Neural Networks.

- Unsupervised Learning: Models identify patterns in unlabeled data. Techniques such as K-Means clustering and dimensionality reduction with Principal Component Analysis (PCA) fall into this category.

- Reinforcement Learning: An agent learns which actions to take through trial and error, receiving feedback in the form of rewards or penalties. Notable algorithms include Q-Learning and Deep Q-Networks (DQNs).
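As an illustration of unsupervised learning, the sketch below implements K-Means with Lloyd's algorithm in plain Python. It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points, stopping once the centroids stop changing. The random initialization, the iteration cap, and the six-point toy dataset are assumptions chosen for this example; the algorithm is never given any labels.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm: alternate assignment and update steps."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iters):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: centroids no longer move
        centroids = new_centroids
    return centroids, clusters


# Unlabeled toy data: two groups, one near (0, 0) and one near (10, 10).
data = [(0.0, 0.2), (0.3, -0.1), (-0.2, 0.1), (10.1, 9.8), (9.9, 10.2), (10.0, 10.1)]
centroids, clusters = kmeans(data, k=2)
print(sorted(centroids))
```

With two well-separated groups, the algorithm recovers them without seeing a single label. On real data the outcome can depend on the initialization, which is why practical implementations usually run several restarts or use smarter seeding such as k-means++.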

Challenges of Interpretability

Despite the power of machine learning models, one significant challenge is their lack of interpretability. Complex models, particularly deep neural networks, can have millions of parameters, making it difficult to trace how a specific decision was made. This "black box" nature raises serious concerns:

“In fields like healthcare and finance, understanding the rationale behind a model's prediction is crucial for ensuring accuracy, fairness, and accountability.”

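The lack of transparency can lead to ethical dilemmas and unintended consequences. For instance, if a model used for hiring decisions learns biases present in historical hiring data, it could reinforce existing inequalities, and without insight into its reasoning, that bias may go unnoticed.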

Approaches to Explainable AI

To address the interpretability issue, researchers and practitioners are increasingly focusing on developing explainable AI (XAI). Several approaches are gaining traction:

- Model Simplification: Using simpler models, such as shallow decision trees or linear models, that are inherently more interpretable.

- Post-hoc Explanations: Generating explanations for complex models after training. Techniques include LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations).

- Visualization Tools: Using plots such as feature-importance charts and partial dependence plots to help users understand model behavior.

These methods aim to make the model's decision-making process more transparent and trustworthy.
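The idea behind SHAP can be illustrated by computing Shapley values exactly for a tiny model. The sketch below is not the SHAP library's API: it brute-forces every coalition of features, filling in features outside a coalition with values from a baseline input (one common convention, assumed here). `toy_model` is a hypothetical model with one additive feature and two interacting features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one input by enumerating all feature coalitions.

    Features outside a coalition are replaced by their baseline value
    (an assumed convention; other definitions of "missing" exist).
    """
    n = len(x)

    def value(coalition):
        # Coalition members keep the input's values; the rest fall back to the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    # Hypothetical model: feature 0 acts additively, features 1 and 2 interact.
    return 3 * z[0] + 2 * z[1] * z[2]


x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(v, 6) for v in phi])  # [3.0, 1.0, 1.0]
```

Feature 0 receives its full additive effect of 3, while the interaction term's contribution of 2 is split evenly between features 1 and 2; the attributions sum to the difference between the prediction and the baseline prediction. Because the number of coalitions grows exponentially with the number of features, practical tools rely on sampling approximations or model-specific algorithms rather than this exhaustive loop.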

The Path Forward

As machine learning continues to evolve, balancing model complexity with interpretability will be critical. Emerging trends promise to further enhance the capability and trustworthiness of ML models: federated learning, which trains models across decentralized devices without centralizing the raw data and so helps protect privacy, and causal inference, which seeks to identify cause-and-effect relationships rather than mere correlations.
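Federated averaging (FedAvg) is one widely used federated learning algorithm: each device trains on its own data, and a server combines only the resulting model parameters. The sketch below assumes a deliberately tiny setup: three hypothetical clients with made-up data, a one-parameter linear model y = w·x, a few full-batch gradient steps per round, and a server that averages client weights in proportion to each client's number of samples.

```python
# Hypothetical private datasets for three clients, roughly following y = 3x.
CLIENTS = [
    [(1.0, 3.1), (2.0, 5.9), (3.0, 9.2)],
    [(0.5, 1.4), (1.5, 4.6)],
    [(4.0, 12.1), (2.5, 7.4), (3.5, 10.6), (1.0, 2.9)],
]


def local_update(w, data, lr=0.02, epochs=5):
    """Client side: a few full-batch gradient steps on mean squared error."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w  # only the updated weight leaves the client


def federated_averaging(clients, rounds=50, w=0.0):
    """Server side: broadcast w, collect local updates, take a weighted average."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        local_ws = [local_update(w, data) for data in clients]
        # Weight each client's model by its share of the training samples.
        w = sum(lw * len(data) for lw, data in zip(local_ws, clients)) / total
    return w


w = federated_averaging(CLIENTS)
print(f"global weight: {w:.3f}")
```

The raw (x, y) pairs stay inside each client's `local_update` call; only the updated weight reaches the server, which still ends up with a global model close to the underlying slope.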

“The future of machine learning hinges not only on making models more powerful but also on making them more understandable and fair.”

Ultimately, demystifying machine learning models is an ongoing journey. By continuing to innovate and prioritize transparency, we can harness the full potential of these technologies while maintaining public trust and ethical integrity.